Stuff That Gets You Interested!

AI, Image Analysis and Convolutional Neural Networks.

Analysts! After the overwhelming response to our introductory blog, here we are (quite ahead of schedule) with the very first blog of the first block. It covers recent technological advancements and applications of data science and machine learning. Before you start, a word of caution: read on only if you have a basic understanding of the commonly used data-mining and machine learning techniques.

This article goes slightly above the beginner level. However, it is just a brief overview and not a tutorial. Readers who are new to data science or machine learning algorithms need not worry, because in two weeks’ time we will be uploading the very first data science session, with an introduction to R and an overview of basic R programming.

Now, this is the buzzword of our generation, and perhaps of generations to come. What is it that you keep hearing and reading about in the technology industry? What is it that the entire industry is adopting? It goes by various names nowadays, like automation or machine learning, but you will most commonly have heard of it as Artificial Intelligence (AI). Yes, AI. And today we’re going to talk about how AI is being used by technology giants like Google and Microsoft for image analysis.

Before we get into how image analysis is done using AI algorithms, let’s talk about image analysis itself. Shall we?

Image analysis ranges from finding basic shapes, detecting edges, removing noise and counting objects to spotting anomalies in routine activity. Security and surveillance are incomplete without an intelligent system that can detect pedestrians and vehicles, and make a difference. Let us hear about it from someone who is currently making huge strides in this field.

Uncanny Vision (http://www.uncannyvision.com/), a startup delivering image analysis, tells us how it happens. Their system identifies objects in images using an analytical system powered by deep learning algorithms (we’ll have an entire blog about deep learning next week). The deep learning framework Caffe powers this system. The framework, maintained by the Berkeley Vision and Learning Center (BVLC), is capable of processing more than 60 million images in a single day using one NVIDIA K40 GPU. On average, this amounts to about 694 images per second. Compare that with the fact that we need only about 17 images per second to turn a series of images into a video, with HD video going up to 60. Basically, this system can analyze about 11 HD videos at once!
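
The back-of-the-envelope arithmetic above is easy to check. The short Python sketch below only redoes the division; the variable names are ours, and the inputs are the figures quoted in the paragraph (60 million images per day, 60 frames per second for HD video).

```python
SECONDS_PER_DAY = 24 * 60 * 60   # 86,400 seconds

images_per_day = 60_000_000      # throughput figure quoted above
hd_frame_rate = 60               # frames per second, upper figure quoted above

# Average throughput per second, and how many 60 fps streams that could feed.
images_per_second = images_per_day / SECONDS_PER_DAY
hd_streams = images_per_second / hd_frame_rate

print(f"{images_per_second:.1f} images per second")
print(f"{hd_streams:.1f} simultaneous 60 fps streams")
```

This comes out to roughly 694 images per second, or a little over 11 streams at 60 frames per second each.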

The algorithm most widely used for image analysis is undoubtedly the Artificial Neural Network, or ANN. We won’t cover ANNs themselves here; as we told you, this article is a bit above the beginner level. What we will talk about is how they have been extended for image analysis. Neural networks can certainly identify and recognize patterns and do a lot of interesting stuff, but when it comes to real-time image analysis, with objects seen from multiple angles and frames that contain little usable content, the capabilities of plain ANNs are limited. This is where convolutional neural networks (CNNs) come in.

A CNN consists of three main steps: convolution, pooling and fully-connected layers. Start with convolution, which you may remember from your engineering lectures, where we convolved a filter with an input function to get some output. Here the input is the image, and padding is added around its border so that the result stays similar in size to the original image. You know the basics, right? Pooling is subsequently done to reduce the size of the data. Then, the network uses fully-connected layers, where every value of the pooled output feeds every neuron, just as in a regular neural network. Batch normalization is sometimes described as a further step; it is typically applied between layers rather than at the very end, and it remains in common use.
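
To make the steps concrete, here is a minimal pure-Python sketch of one forward pass: pad, convolve, pool, then a fully-connected layer. Everything in it is an assumption made for illustration: the function names, the toy 4x4 image, the hand-picked edge filter and the dense-layer weights. A real network learns its filters and weights during training and would be built with a framework such as Caffe. We also slip in a ReLU after the convolution, which the description above does not mention but which most CNNs use.

```python
def pad(image, p):
    """Surround a 2-D list with p rows and columns of zeros."""
    width = len(image[0]) + 2 * p
    rows = [[0.0] * p + [float(v) for v in row] + [0.0] * p for row in image]
    border = [[0.0] * width for _ in range(p)]
    return border + rows + [row[:] for row in border]


def convolve(image, kernel):
    """Slide a square kernel over the image with stride 1 and no padding.

    Like most deep learning libraries, this does not flip the kernel,
    so strictly speaking it computes a cross-correlation.
    """
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(k) for b in range(k))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap):
    """Replace negative responses with zero."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Keep the largest value in each non-overlapping size x size block."""
    return [
        [
            max(fmap[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


def dense(features, weights, bias):
    """Fully-connected layer: every input value feeds every output neuron."""
    return [sum(w * x for w, x in zip(row, features)) + b
            for row, b in zip(weights, bias)]


# Toy 4x4 image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked filter that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = relu(convolve(pad(image, 1), kernel))  # still 4x4 thanks to padding
pooled = max_pool(feature_map)                       # reduced to 2x2
flat = [v for row in pooled for v in row]            # 4 values
# Hypothetical weights for two output classes; a real network learns these.
scores = dense(flat, weights=[[0.5] * 4, [-0.5] * 4], bias=[0.0, 1.0])

print(len(feature_map), "x", len(feature_map[0]), "feature map")
print(len(pooled), "x", len(pooled[0]), "after pooling")
print(len(scores), "class scores")
```

Note how padding by one pixel keeps the 3x3 convolution output at the original 4x4 size, and how 2x2 pooling then cuts the data to a quarter.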

This video by Brandon Rohrer will help you understand CNNs:

Let’s talk about some other techniques for image analysis. These algorithms range from simple structures like binary trees to more complex ones like decision trees. Decision trees, for example, are available in Microsoft SQL Server (SQL stands for Structured Query Language). You might never have thought analytics would be used in SQL, but when you think about how data is fetched in SQL Server it makes a lot more sense (data warehousing is an entirely different aspect that deals with the storage of data in relational databases for data mining). A decision tree is a predictive modelling approach used in statistics, data mining and machine learning. Some popular implementations are IBM SPSS Modeler, RapidMiner, Microsoft SQL Server, MATLAB and the programming language R, among others. In R, decision trees use a complexity parameter to control the trade-off between model complexity and accuracy on the training set. A smaller complexity parameter leads to a bigger tree, and vice versa.
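
The effect of the complexity parameter can be seen in a toy example. The Python sketch below is a simplification of the idea, not R's implementation: it grows a tree on a single feature and keeps a split only if it reduces the impurity by at least `cp` times the impurity of the root. The function names, the data and the exact stopping rule are our own assumptions for illustration.

```python
def gini(labels):
    """Gini impurity of a list of 0/1 labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def grow(points, cp, root_error=None):
    """Grow a tree on (x, label) pairs and return its number of leaves.

    A split is kept only if it lowers the size-weighted impurity by at
    least cp times the impurity of the root node.
    """
    error = len(points) * gini([y for _, y in points])
    if root_error is None:
        root_error = error
    best = None
    xs = sorted({x for x, _ in points})
    for lo, hi in zip(xs, xs[1:]):
        threshold = (lo + hi) / 2
        left = [pt for pt in points if pt[0] <= threshold]
        right = [pt for pt in points if pt[0] > threshold]
        split_error = (len(left) * gini([y for _, y in left])
                       + len(right) * gini([y for _, y in right]))
        gain = error - split_error
        if best is None or gain > best[0]:
            best = (gain, left, right)
    if best is None or best[0] <= 0 or best[0] < cp * root_error:
        return 1  # not worth splitting: this node becomes a leaf
    return grow(best[1], cp, root_error) + grow(best[2], cp, root_error)


# Made-up data: label is mostly 0 for small x and 1 for large x,
# with one noisy point at x = 3.
data = [(0, 0), (1, 0), (2, 0), (3, 1), (4, 0),
        (5, 1), (6, 1), (7, 1), (8, 1), (9, 1)]

for cp in (0.8, 0.3, 0.01):
    print(f"cp={cp}: {grow(data, cp)} leaves")
```

With these made-up points, the largest `cp` refuses every split, the middle value allows only the main split, and the smallest value also carves out the single noisy point, giving the biggest tree.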

Below: Perhaps the most common example used for explaining decision trees

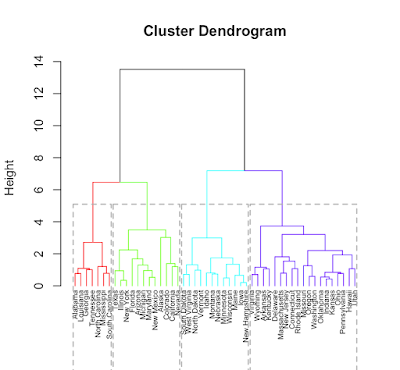

Another famous technique is cluster analysis, which categorizes the objects in a dataset into different groups, or clusters. The data may be grouped using any number of parameters, hence there are multiple algorithms for cluster analysis. Clustering, however, does not always meet current data analysis needs. With the introduction of the Internet of Things (IoT), the data that needs to be analyzed comes in much higher volume. Here many methods fail due to the curse of dimensionality, resulting in many parameters being left out while optimizing the algorithm.
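
As one example of the many possible clustering algorithms, here is a minimal sketch of agglomerative (hierarchical) clustering with single linkage in plain Python. The function name and the six sample points are made up for illustration, and the brute-force search is only practical for tiny datasets.

```python
import math


def single_linkage(points, n_clusters):
    """Repeatedly merge the two closest clusters until n_clusters remain.

    The distance between two clusters is the distance between their
    two closest members (single linkage).
    """
    clusters = [[p] for p in points]  # start with one cluster per point
    while len(clusters) > n_clusters:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                d = min(math.dist(a, b)
                        for a in clusters[i] for b in clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        _, i, j = best
        merged = clusters.pop(j)   # j > i, so index i stays valid
        clusters[i].extend(merged)
    return clusters


# Made-up 2-D points forming two obvious groups.
points = [(1.0, 1.0), (1.5, 1.2), (1.2, 0.8),
          (8.0, 8.0), (8.5, 8.2), (7.8, 8.4)]

clusters = single_linkage(points, 2)
for cluster in clusters:
    print(sorted(cluster))
```

A dendrogram is simply a drawing of the order in which these merges happen, with the merge distance on the vertical axis.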

Below: Hierarchical clustering dendrogram in R

So, after seeing how these different algorithms can be used for image analysis, we find that neural networks are way ahead of the others in terms of accuracy and speed. They are more complex, but that is a trade-off you are willing to make when performance is of utmost concern. Neural networks also demand more hardware support for implementation than other algorithms. Believe it or not, neural networks have existed for decades, and convolutional networks were already in use in the 1990s, but their success was limited by the restricted availability of suitable hardware.

Movidius Myriad 2, Eyeriss and the Holographic Processing Unit in Microsoft HoloLens are some of the vision processors being used in today’s vision processing systems. These are different from regular video-processing units as they are suited to running machine vision algorithms such as CNNs. Some applications of vision processing include robotics, IoT, digital cameras and smart cameras.

After all this exhausting tech-talk about neural networks and image analysis, let’s sit back and look at the future we are about to witness. In the words of Dr. Reger, CTO of Global Business at Fujitsu, “After logic programming with languages like Prolog and expert systems, the present phase of advanced neural network consists of the third wave of AI”. But we are still decades away from an artificial system that comes close to natural vision. The brilliance of the human body is the reason why researchers have been trying to break the enigma of computer vision by analyzing the visual mechanics of human beings and other animals.

Again, we would like to end our blog with the words of Dr. Reger: “Neural Networks in general and image analysis in particular will remain some of the most interesting and important topics in cutting-edge information technology in years to come.” As fellow machine learning lovers, we surely hope they do.
