Sentiment Analysis Using R

Twitter Sentiment Analysis and GST

Twitter Authorization and Fetching Twitter Timeline Data

Over the years, Twitter has become the go-to place for voicing our thoughts. Even though tweets are limited to 140 characters, the sheer volume of tweets generated is massive. In this post we analyze reactions to the newly implemented Goods and Services Tax (GST). The code is followed by a step-by-step explanation; do go through it so that you can run the code correctly.

Some quick facts about Twitter!

- Created in March 2006 by Jack Dorsey, Evan Williams, Biz Stone and Noah Glass, Twitter was launched in July 2006 and the first tweet was sent by Jack Dorsey on 21 March 2006.

- Within 10 years of its launch, Twitter had over a billion accounts and 300 million active users.

- Over 500 million people visit the website each month without logging in.

- 500 million tweets are sent every day, i.e. about 6,000 tweets every second.

- This is enough to fill a 10-million-page book!

- To give another example of Twitter's usage, 6,18,275 tweets were sent in a single minute during the 2014 FIFA World Cup final.

The impact of Twitter on our daily lives cannot be ignored. Almost 70% of global brands use the platform to market their products. Tweets reflect the public's perception of a brand, or of just about any other topic, which makes Twitter an interesting place to gauge public opinion.

Goods and Services Tax

Simply put, GST is a single nationwide indirect tax intended to turn the whole of India into a unified market. One tax is levied on the supply of goods and services, right from the manufacturer to the consumer.

Below: GST simplified

There are several benefits of implementing GST, and they vary according to your position in the supply chain. A few are listed below.

For the consumer

- The consumer only has to pay a single tax, which is proportional to the value of the goods and services.

- The tax burden on most commodities will come down.

For the Central and State Governments

- With multiple taxes being replaced, this unified tax will be easy to administer and keep track of.

- Higher revenue efficiency is also expected, as the cost of collection will decrease.

For the industries

- GST would make doing business in the country tax neutral, irrespective of where the business is located.

- Hidden costs of doing business will be reduced, with cascading taxes rolled into one seamless tax credit.

However, there are complications as well. Other countries, such as South Korea, Australia and Malaysia, faced problems while implementing similar tax rules.

So we can expect both positive and negative sentiment about the new reforms. That is what we are going to analyze.

This code fetches a particular set of tweets and scans them for words that indicate positive or negative sentiment. Words like good, appreciable and superb indicate a positive outlook; words like problematic and loss indicate a negative one. We then create a function that counts such words. The "sentiment score" is the number of positive words minus the number of negative words, and it is used to gauge the public's sentiment.

Let's go through the code!

```r
library(twitteR)      # line 1
library(purrr)        # line 2
library(dplyr)        # line 3
require('ROAuth')     # line 4
require('RCurl')      # line 5
library(plyr)         # line 6
library(stringr)      # line 7
library(base64enc)    # line 8
library(httr)         # line 9
library(bitops)       # line 10

# Lines 11-31: score each sentence as (number of positive words) - (number of negative words)
score.sentiment <- function(sentences, pos.words, neg.words, .progress = 'none')  # line 11
{                                                                                  # line 12
  require(plyr)                                                                    # line 13
  require(stringr)                                                                 # line 14
  scores <- laply(sentences, function(sentence, pos.words, neg.words) {            # line 15
    sentence <- gsub('[[:punct:]]', "", sentence)  # line 16: remove punctuation
    sentence <- gsub('[[:cntrl:]]', "", sentence)  # line 17: remove control characters
    sentence <- gsub('\\d+', "", sentence)         # line 18: remove digits
    sentence <- tolower(sentence)                  # line 19: lower-case for matching
    word.list <- str_split(sentence, '\\s+')       # line 20: split on whitespace
    words <- unlist(word.list)                     # line 21: list -> character vector
    pos.matches <- match(words, pos.words)         # line 22
    neg.matches <- match(words, neg.words)         # line 23
    pos.matches <- !is.na(pos.matches)             # line 24
    neg.matches <- !is.na(neg.matches)             # line 25
    score <- sum(pos.matches) - sum(neg.matches)   # line 26
    return(score)                                  # line 27
  }, pos.words, neg.words, .progress = .progress)  # line 28
  scores.df <- data.frame(score = scores, text = sentences)  # line 29
  return(scores.df)                                          # line 30
}                                                            # line 31

# Lines 32-33: read the word lists (edit these paths for your system)
pos.words <- scan('C:/Users/RakeshS/Desktop/positivewords.txt', what = 'character', comment.char = ';')  # line 32
neg.words <- scan('C:/Users/RakeshS/Desktop/negative.txt', what = 'character', comment.char = ';')      # line 33

# Lines 34-37: score the tweets and plot them (run these after lines 48-51)
bscore <- score.sentiment(tweet_df$text, pos.words, neg.words, .progress = 'text')   # line 34
rscore <- score.sentiment(tweet2_df$text, pos.words, neg.words, .progress = 'text')  # line 35
hist(rscore$score)                                                                   # line 36
hist(bscore$score)                                                                   # line 37

# Lines 38-47: Twitter credentials and OAuth setup
consumerKey <- "xxxxxx"                                    # line 38
reqURL <- "https://api.twitter.com/oauth/request_token"    # line 39
accessURL <- "https://api.twitter.com/oauth/access_token"  # line 40
authURL <- "https://api.twitter.com/oauth/authorize"       # line 41
consumerSecret <- "xxxxxx"                                 # line 42
accessToken <- "xxxxxx"                                    # line 43
accessTokenSecret <- "xxxxxx"                              # line 44
twitCred <- OAuthFactory$new(consumerKey = consumerKey, consumerSecret = consumerSecret,
                             requestURL = reqURL, accessURL = accessURL, authURL = authURL)  # line 45
twitCred$handshake()                                       # line 46: opens the browser for authorization
setup_twitter_oauth(consumerKey, consumerSecret, accessToken, accessTokenSecret)  # line 47

# Lines 48-51: fetch the timelines and convert them to data frames
tweet1 <- userTimeline("@GST_Council", n = 300)         # line 48
tweet2 <- userTimeline("@askGST_GoI", n = 300)          # line 49
tweet_df <- as_tibble(map_df(tweet1, as.data.frame))    # line 50 (as_tibble() replaces the deprecated tbl_df())
tweet2_df <- as_tibble(map_df(tweet2, as.data.frame))   # line 51
```

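Note: The code is not meant to be run from top to bottom in one go. For example, lines 34 to 37 use tweets that are only fetched on lines 48 to 51. Following the steps below in order makes the execution easy to understand.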

Step 1:

Load all the libraries, i.e. lines 1 to 10. Some of them are discussed in Step 3, and links for the rest are provided at the end of the post.

Step 2:

Download the negative words text file here: negative words.

Download the positive words text file here: positive words.

Change the file paths on lines 32 and 33 so that the scan() function can locate the text files on your system. scan() reads data of the type given by its what argument. Since we specify what = 'character', every field is read as a string.

The comment.char argument names a character that marks the start of a comment: everything from that character to the end of the line is ignored. Our text files use ";" to begin comment lines, so we set comment.char = ';' to keep those lines from being read as words.

Execute lines 32 and 33 to read the text files into pos.words and neg.words.

Executing these two lines gives output like this.

You can also view these vectors.

For example, type neg.words in the R console and press Enter.
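This self-contained sketch shows what comment.char does without needing the real word lists. It writes a made-up word file to a temporary location and reads it back with the same scan() arguments used on lines 32 and 33. The file's contents are hypothetical; they only imitate comment lines that start with ";".

```r
# Hypothetical word file: two comment lines followed by three words
word_file <- tempfile(fileext = ".txt")
writeLines(c(
  "; header line describing the word list",
  ";; another comment line",
  "good",
  "superb",
  "appreciable"
), word_file)

# what = 'character' reads every field as a string;
# comment.char = ';' ignores everything from ';' to the end of a line
words <- scan(word_file, what = 'character', comment.char = ';', quiet = TRUE)

print(words)     # only the three words; the comment lines are skipped
length(words)    # number of entries read
head(words, 2)   # peek at the first entries, as you might with neg.words
```

The same calls, head(neg.words) and length(neg.words), are a quick way to check that the real files were read correctly.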

Step 3

We'll now discuss the packages used in the code: twitteR, ROAuth, RCurl, bitops, httr and base64enc.

twitteR, ROAuth and RCurl

The twitteR package provides access to the Twitter API. We load it on line 1. The package favours API calls that are more useful for data analysis than for day-to-day interaction. However, the package alone cannot establish connections with the Twitter interface, so we also use the ROAuth and RCurl packages, loaded on lines 4 and 5 respectively.

ROAuth stands for R interface for OAuth (Open Authorization). The package provides an interface for authenticating via OAuth to a server of your choice; in this case, Twitter. OAuth is designed to work with the Hypertext Transfer Protocol (HTTP). It allows an authorization server, with the approval of the resource owner, to issue access tokens to third-party clients. The third party then uses the access token to access the protected resources hosted by the resource server. This will become clearer when we run the authorization code.

The second package used to facilitate HTTP connections is RCurl. It lets users compose HTTP/FTP requests with finer control, and it makes authenticating with web servers and processing their data easy.

The OAuthFactory object created on line 45 has the following fields:

- consumerKey: the consumer key provided by your application

- consumerSecret: the consumer secret provided by your application

- requestURL: the URL for retrieving request tokens

- authURL: the URL for authorization/verification purposes

- accessURL: the URL for retrieving access tokens

- oauthSecret: for internal use
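setup_twitter_oauth() relies on the httr package for the handshake, so it can help to see the same ingredients as httr objects. The sketch below is only an illustration and assumes httr is installed. It is not what twitteR does internally. The app name 'Sentiment010' is the example name used later in this post, and the key and secret are placeholders. Building these objects makes no network call.

```r
library(httr)

# Placeholder credentials (hypothetical): replace with your own app's values
consumerKey    <- "xxxxxx"
consumerSecret <- "xxxxxx"

# The three OAuth 1.0a endpoints that lines 39-41 store as plain strings
ep <- oauth_endpoint(
  request   = "https://api.twitter.com/oauth/request_token",
  authorize = "https://api.twitter.com/oauth/authorize",
  access    = "https://api.twitter.com/oauth/access_token"
)

# The app's identity: its name, consumer key and consumer secret
app <- oauth_app("Sentiment010", key = consumerKey, secret = consumerSecret)

# These are ordinary R objects; nothing has been sent to Twitter yet
print(ep$request)
print(app$key)
```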

There are a few steps you need to follow to obtain these parameters:

1. Go to https://dev.twitter.com/ and click on My apps.

2. On the My apps page, click on Create New App, which takes you to this page.

In the Name field, fill in the name of your project. Make sure it is unique; for example, we named our application 'Sentiment010'.

You don't need to give a detailed description. Just writing 'sentiment analysis' will do.

The Website field can be filled in two ways. If you have a website, enter its address; we used our blog's address, https://www.rsociety010.blogspot.com. Otherwise, you can use a placeholder such as https://www.google.com. Either way, include the 'https://' prefix.

You don't need to fill in the Callback URL field.

Tick the checkbox and click on 'Create your Twitter application'.

This will lead you to the below page.

3. Scroll down the page and you will find a button labelled 'Generate My Access Token and Token Secret'. Click it, and you will have all four parameters needed to set up the connection.

Now copy your application's Consumer Key, Consumer Secret, Access Token and Access Token Secret and paste them into lines 38, 42, 43 and 44 respectively.

Now run the code from line 38 to line 46. You will see something like this in the console, and your default browser will open. (If your browser does not open, copy the URL that appears in the console and open it yourself.)

The browser will open to a screen like this:

Click on 'Authorize app'. This generates a code, which you need to copy and paste into the console like this.

After pasting the code, press Enter. You will not get any output; the cursor simply moves to a new line. This confirms that your application has been authorized.

The final step in getting access to tweets is to execute line 47.

The function setup_twitter_oauth() wraps the OAuth authentication handshake functions from the httr package for the current twitteR session. Calling setup_twitter_oauth() with all four parameters prevents R from opening a web browser again.

Remember that this is only authentication, so no output is produced yet.

However, the first time you do this, R may report that it is using direct authentication and ask whether to cache the OAuth credentials in a local file between R sessions.

The console will show the options 1: Yes and 2: No. Enter 1 and press Enter.

Once authentication is successful, the cursor moves to a new line.

Step 4

The next block of code to execute is lines 11 to 31, where we create the function that counts positive and negative words. These counts are then used to calculate the score.

We have named the function 'score.sentiment', but you can use any name you like. The function() keyword lets us specify the function's parameters, the steps carried out when it is called, and its output.

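Lines 13 and 14 call require(). Like library(), it loads and attaches a package. Unlike library(), it returns FALSE with a warning, instead of stopping with an error, if the package is not installed. This makes it a common choice when a function needs a particular package.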
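The difference between require() and library() is easy to check in the console. In this sketch, 'notARealPackage123' is a deliberately made-up package name.

```r
# require() returns TRUE/FALSE instead of stopping with an error
has_pkg <- suppressWarnings(
  require("notARealPackage123", character.only = TRUE, quietly = TRUE)
)
print(has_pkg)    # FALSE: the package is missing, but execution continues

# stats ships with R, so this succeeds
has_stats <- require("stats", character.only = TRUE, quietly = TRUE)
print(has_stats)  # TRUE

# library() raises an error for a missing package instead
res <- tryCatch(library("notARealPackage123", character.only = TRUE),
                error = function(e) "error")
print(res)
```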

The gsub() (global substitute) function is used on lines 16, 17 and 18. In simple terms, it replaces every match of a pattern with a replacement string. Line 16 removes punctuation marks by replacing them with an empty string, line 17 removes control characters the same way, and line 18 removes digits. Line 19 then converts the text to lower case so that capitalized words still match the word lists. Thus we are left only with words that can be matched against our text files.
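To see lines 16 to 19 in action, here they are applied to a single made-up tweet, using base R only.

```r
# A hypothetical tweet, cleaned with the same steps as lines 16-19
sentence <- "GST is GOOD!!! Rates fell 5% in 2017."

sentence <- gsub('[[:punct:]]', "", sentence)  # drop punctuation: ! % .
sentence <- gsub('[[:cntrl:]]', "", sentence)  # drop control characters (none here)
sentence <- gsub('\\d+', "", sentence)         # drop runs of digits: 5 and 2017
sentence <- tolower(sentence)                  # lower-case for matching

print(sentence)
```

The leftover double and trailing spaces do no harm. Line 20 splits on '\\s+', and any empty string left over simply matches no entry in the word lists.

Because control characters such as newlines are deleted rather than replaced with a space, two words separated only by a line break would be glued into one word. Using " " as the replacement on line 17 would avoid that.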

The str_split() function on line 20 splits each string in a vector at a pattern and returns a list containing one character vector per string. We split on '\\s+', i.e. one or more whitespace characters, so each element of that vector is an individual word. The unlist() function on line 21 then flattens the list into a single character vector of words.
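This short example shows the list-then-unlist shape. It uses base R's strsplit(), which behaves like str_split() for this input, so it needs no packages. The sentence is made up.

```r
# A hypothetical cleaned sentence (note the double space before "and")
sentence <- "gst is good  and simple"

word.list <- strsplit(sentence, '\\s+')  # a list with one character vector per input string
str(word.list)

words <- unlist(word.list)               # flatten the list into one character vector
print(words)
```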

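Now we are ready to match words, which happens on lines 22 to 25. match() returns the position of each word in the positive (or negative) word list, or NA if the word is not in the list, and !is.na() turns these results into TRUE/FALSE values. The score computed on line 26 is the number of positive words minus the number of negative words in the tweet, and this value is the output for that tweet. laply() (line 15) applies these steps to every tweet, and line 29 collects the scores and tweet texts into a data frame.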
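The matching logic of lines 22 to 26 can be tried on toy data. The word lists and the tokenized tweet below are made up for illustration.

```r
# Hypothetical word lists and an already-cleaned, split tweet
pos.words <- c("good", "simple", "superb")
neg.words <- c("loss", "problematic")
words <- c("gst", "is", "good", "but", "filing", "is", "problematic", "and", "a", "loss")

pos.matches <- match(words, pos.words)  # position in pos.words, or NA if absent
neg.matches <- match(words, neg.words)

pos.matches <- !is.na(pos.matches)      # TRUE where the word is a positive word
neg.matches <- !is.na(neg.matches)      # TRUE where the word is a negative word

score <- sum(pos.matches) - sum(neg.matches)  # sum() counts each TRUE as 1
print(score)  # one positive word, two negative words
```

words %in% pos.words gives the same TRUE/FALSE vector as lines 22 and 24 combined, since %in% is defined in terms of match().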

If the score is negative, the tweet contains more negative words than positive ones, which can be interpreted as negative sentiment towards the topic. The opposite holds if the score is positive, and the sentiment can be judged neutral if the score is zero.
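Putting this step together, here is a dependency-free sketch of the same scoring idea, with a label based on the sign of the score. It is not the post's score.sentiment(). It swaps plyr::laply() for base R's vapply() and stringr::str_split() for strsplit(), and it drops the progress bar. The function name score_sentences, the sentiment column and the sample tweets are all hypothetical.

```r
# Hypothetical base-R variant of score.sentiment() with a sentiment label
score_sentences <- function(sentences, pos.words, neg.words) {
  scores <- vapply(sentences, function(sentence) {
    sentence <- gsub('[[:punct:]]', "", sentence)
    sentence <- gsub('[[:cntrl:]]', "", sentence)
    sentence <- gsub('\\d+', "", sentence)
    words <- unlist(strsplit(tolower(sentence), '\\s+'))
    sum(words %in% pos.words) - sum(words %in% neg.words)
  }, integer(1), USE.NAMES = FALSE)
  # sign() gives -1, 0 or 1; adding 2 turns that into an index 1, 2 or 3
  label <- c("negative", "neutral", "positive")[sign(scores) + 2]
  data.frame(score = scores, sentiment = label, text = sentences,
             stringsAsFactors = FALSE)
}

pos.words <- c("good", "simple", "superb")
neg.words <- c("loss", "problematic")
tweets <- c("GST is good and simple!",
            "Filing is problematic; a loss for traders.",
            "GST rollout starts on 1 July.")

result <- score_sentences(tweets, pos.words, neg.words)
print(result)
```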

Now we have everything that we need to authorize, fetch and analyze our Tweets!

Step 5

Execute lines 48 and 49 to fetch the tweets. The userTimeline() function fetches the most recent tweets from a given user's timeline. Its first parameter is the user whose timeline you want, here a Twitter handle. To search for a hashtag or plain text instead, twitteR provides a separate function, searchTwitter(). The second parameter, n, is the maximum number of tweets to fetch. Here we use "@GST_Council" and "@askGST_GoI", the official Twitter handles for GST-related queries. (This is only an example; the content of these timelines will change over time.)

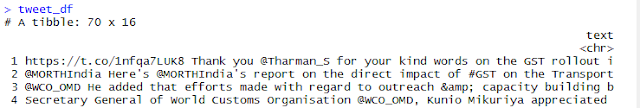

You can view the tweets as well. Here are a few.

Execute lines 50 and 51 to convert each list of tweets into a data frame. map_df() applies as.data.frame() to every tweet and stacks the resulting rows into one data frame. The tweets then look like this:
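userTimeline() returns a list with one object per tweet, and lines 50 and 51 turn that list into rows of a data frame. This sketch imitates that with hypothetical tweet records stored as plain lists. The field names are loosely modelled on twitteR's columns and should be treated as assumptions. The base-R do.call(rbind, lapply(...)) stands in for purrr::map_df(), which does the apply-and-bind in one step.

```r
# Hypothetical tweet records (plain lists standing in for twitteR status objects)
tweets <- list(
  list(text = "GST is good", screenName = "GST_Council", retweetCount = 3),
  list(text = "Filing deadline extended", screenName = "GST_Council", retweetCount = 10)
)

rows <- lapply(tweets, as.data.frame, stringsAsFactors = FALSE)  # one-row data frame per tweet
tweet_df <- do.call(rbind, rows)                                  # stack the rows

print(tweet_df)
tweet_df$text  # the column passed to score.sentiment() on lines 34-35
```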

Then execute lines 34 and 35, where we apply the scoring function to the text of the tweets.

Finally, execute lines 36 and 37 to obtain histograms of score vs frequency.

We now have histograms comparing score with frequency. Let's analyze the first plot, since the second plot covers only a few class ranges. The scores fall into different ranges:

- 0.5 to 1: around 25 times
- 1.5 to 2: around 12 times
- 2.5 to 3: around 4 times
- 3.5 to 4: once
- -1 to -0.5: once
- -0.5 to 0: more than 25 times, i.e. more often than any positive range

Since the scores are whole numbers, each of these bars holds a single score: 1, 2, 3, 4, -1 and 0 respectively. The histogram is also somewhat skewed to the right. Judging by the tweets from this handle, the outlook towards GST is generally positive, and the single most common score is 0, i.e. neutral. But keep in mind that this is an official government handle, which is unlikely to post negatively about its own policies.
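Because the scores are whole numbers, R's default hist() breaks can come out half a unit wide. That is likely why the ranges above step by 0.5 with one score per bar. This sketch shows two ways to get exactly one bar per score, using made-up scores.

```r
# Made-up integer scores standing in for bscore$score or rscore$score
scores <- c(-1, 0, 0, 0, 1, 1, 2)

# Breaks halfway between whole numbers give exactly one bar per score
breaks <- seq(min(scores) - 0.5, max(scores) + 0.5, by = 1)
h <- hist(scores, breaks = breaks, plot = FALSE)  # plot = FALSE: compute the bins only
print(h$mids)    # centre of each bar, i.e. the score it represents
print(h$counts)  # number of tweets with that score

# table() gives the same counts directly, without any binning
print(table(scores))
```

To draw the plot, drop plot = FALSE, or use barplot(table(scores)).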

Note:

Keep in mind that this is just an example of how we can analyze tweets by fetching the right strings. There may be different perspectives on the issues.

Also, you may not need every package we have loaded, such as base64enc, httr and bitops. purrr, however, supplies the map_df() function used on lines 50 and 51. We still recommend loading all of them, since loading these packages solved most of the errors users ran into.

Another example you can work with is a football match: collect tweets for each team and plot one histogram per team.

Additional References:

https://www.rdocumentation.org/packages/purrr/versions/0.2.2.2

https://www.rdocumentation.org/packages/plyr/versions/1.8.4

https://www.rdocumentation.org/packages/dplyr/versions/0.7.2

https://www.rdocumentation.org/packages/stringr/versions/1.1.0

https://www.rdocumentation.org/packages/base64enc/versions/0.1-3

https://www.rdocumentation.org/packages/httr/versions/1.2.1

https://www.rdocumentation.org/packages/bitops/versions/1.0-6

https://cleartax.in/s/gst-law-goods-and-services-tax
